Recently I watched a video about regression and probability. Here I present some of the information from it.

Regression analysis is about establishing a relationship between variables. That means there is a set of independent variables and corresponding dependent variables.

Example

Let us denote the price of a house by the variable Y, and the factors affecting the price, such as area, age and location, by the variable X.

To make things simpler, let us take only the area of the house as the independent variable, so there is only one feature. Now we need to model the price (Y) of a house, so that we can predict the price of a house when someone gives us its area. We can use the following simple equation to model that:

$$H(t_0,t_1,i) = t_0 + t_1 X(i) \qquad \text{EQ(1)}$$

t0 and t1 are constants (the parameters of the model). When factors other than area also decide the price, we can add more features to the equation, for example 't0 + t1 * X1 + t2 * X2 + t3 * X1 * X2', and so on.
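A minimal MATLAB sketch of evaluating such a multi-feature model for several samples at once. It assumes the interaction term gets its own parameter t3. The parameter values and the two feature vectors are made-up numbers for illustration only.

```matlab
% Hypothetical parameters [t0 t1 t2 t3]; made-up values for illustration
t = [10 2 3 0.5];
% Hypothetical feature values for three samples (e.g. X1 = area, X2 = age)
X1 = [1 2 3];
X2 = [4 5 6];

% H = t0 + t1*X1 + t2*X2 + t3*X1*X2, evaluated element-wise for every sample
H = t(1) + t(2) * X1 + t(3) * X2 + t(4) * X1 .* X2;
disp(H);
```

The element-wise operator `.*` multiplies X1 and X2 sample by sample. A plain `*` would attempt a matrix product and fail for two 1-by-3 row vectors.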

Here i is the sample number. That means if we have a data set of 100 'area - price' pairs, then X(5) is the 5th area in the data set (and Y(5) is its price).
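To make the indexing concrete, here is a small sketch that evaluates EQ(1) for one sample and for all samples. The data are the first five 'area - price' pairs of the data set used in the code later in this post. t0 = 50 and t1 = 1 are that code's starting guesses, not fitted values. Note that MATLAB indexing starts at 1, so X(5) is the fifth element.

```matlab
% First five 'area - price' pairs from the post's data set
X = [0 1 2 3 4];
Y = [100 110 120 122 125];

% Example parameter values (the starting guesses used later, not a fitted model)
t0 = 50;
t1 = 1;

% H(t0, t1, i) from EQ(1): predicted price for sample number i
H = @(t0, t1, i) t0 + t1 * X(i);

h5 = H(t0, t1, 5);              % prediction for the 5th area, X(5) = 4
hAll = H(t0, t1, 1:numel(X));   % predictions for every sample at once
disp(hAll);
```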

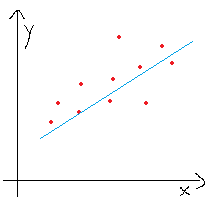

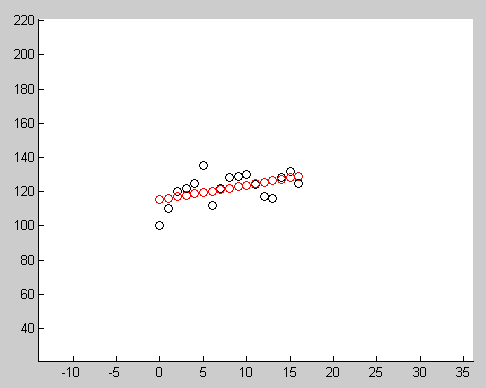

If we plot EQ(1), it is a straight line. So basically we are trying to find a line that matches the given set of house prices as closely as possible. The image shows such a line, which can be used to predict the house price for a given X value.

EQ(1) can be extended to higher degrees (for example, by adding an X(i)² term) to capture more complex relationships. But simply adding more degrees will not help much; you also need to define the model in a way that is meaningful for the data.

Back to the problem:

Now we need to find values for t0 and t1 that give a meaningful model.

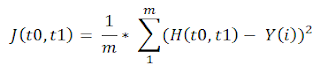

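For that we need to define a cost function J, where m is the number of samples in the data set:

$$J(t_0,t_1) = \frac{1}{2m}\sum_{i=1}^{m}\left(H(t_0,t_1,i) - Y(i)\right)^2 \qquad \text{EQ(2)}$$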

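From EQ(2) we can see that it sums the squared differences between the expected values Y(i) and the values given by our model (here based on the line equation), then scales the sum by 1/(2m). The factor 1/2 only cancels the 2 that appears when the square is differentiated; it does not change where the minimum is.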
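A short sketch of EQ(2) as a MATLAB anonymous function, using the first five pairs of the post's data set. The two parameter pairs are illustrative choices: the starting guesses used later, and a hand-picked line that lies closer to the data. The closer line should give a much smaller cost.

```matlab
% First five 'area - price' pairs from the post's data set
X = [0 1 2 3 4];
Y = [100 110 120 122 125];
m = numel(Y);   % number of samples

% J(t0, t1) from EQ(2): sum of squared errors scaled by 1/(2m)
J = @(t0, t1) sum((t0 + t1 * X - Y).^2) / (2 * m);

costStart = J(50, 1);     % the starting guesses: a line far below the data
costBetter = J(100, 6);   % a hand-picked line much closer to the data
fprintf('J(50,1) = %.2f, J(100,6) = %.2f\n', costStart, costBetter);
```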

The aim is to minimize EQ(2): the smaller it is, the smaller the error, and the more closely our predicted line follows the price data. To do that we can use the gradient descent method. More sophisticated methods are also available, such as conjugate gradient or BFGS; they usually converge faster than plain gradient descent but are more complex to implement.
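For reference, gradient descent repeatedly moves each parameter a small step against its partial derivative of J. Here α is the learning rate; the MATLAB code in this post uses α = 0.01. Both parameters are updated simultaneously, using gradients computed at the current values of t0 and t1:

$$t_j := t_j - \alpha \frac{\partial J(t_0,t_1)}{\partial t_j}, \qquad j \in \{0, 1\}$$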

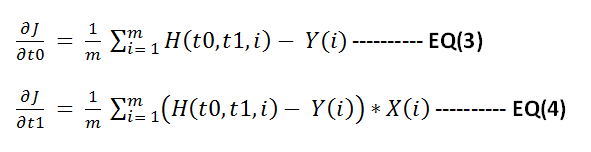

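To implement gradient descent we need the gradient of J with respect to t0 and t1. You can compute it with MATLAB (for example, with the Symbolic Math Toolbox) or by hand. The results are:

$$\frac{\partial J}{\partial t_0} = \frac{1}{m}\sum_{i=1}^{m}\left(H(t_0,t_1,i) - Y(i)\right)$$

$$\frac{\partial J}{\partial t_1} = \frac{1}{m}\sum_{i=1}^{m}\left(H(t_0,t_1,i) - Y(i)\right)X(i)$$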
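Whether the gradients of J are derived in MATLAB or by hand, a quick way to check them is to compare them with a central finite-difference approximation. This sketch does that at the starting guesses t0 = 50, t1 = 1, on the first five data pairs. The step size h = 1e-5 is an assumed, typical choice. Because J is quadratic, the two results should agree up to rounding error.

```matlab
% First five 'area - price' pairs from the post's data set
X = [0 1 2 3 4];
Y = [100 110 120 122 125];
m = numel(Y);

% Cost EQ(2) written as a function of the parameter vector t = [t0 t1]
J = @(t) sum((t(1) + t(2) * X - Y).^2) / (2 * m);

t = [50 1];                      % point at which the gradient is checked
r = t(1) + t(2) * X - Y;         % residuals H - Y
% Analytical gradient: [dJ/dt0, dJ/dt1]
gradAnalytic = [sum(r) / m, sum(r .* X) / m];

% Central-difference approximation of each partial derivative
h = 1e-5;
gradNumeric = zeros(1, 2);
for j = 1:2
    step = zeros(1, 2);
    step(j) = h;
    gradNumeric(j) = (J(t + step) - J(t - step)) / (2 * h);
end

disp([gradAnalytic; gradNumeric]);   % first row analytical, second row numerical
```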

Now apply gradient descent to minimize J. The MATLAB code below shows the steps.

```matlab
% X values (house areas)
sampleX = [0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16];
% Y values (house prices)
sampleY = [100 110 120 122 125 135 112 122 128 129 130 124 117 116 128 132 125];

m = numel(sampleY);   % number of samples
cmap = hsv(1);        % a single colour (red) for the predicted points
costHistory = [];     % value of J at every iteration

% initial guesses for the parameters
theta0 = 50;
theta1 = 1;
alpha = 0.01;         % learning rate

% main iteration loop
for i = 1:10000
    % function H: predicted Y for every sample, EQ(1)
    newSampleY = theta0 + theta1 * sampleX;

    % cost J, EQ(2)
    deviation = sum((newSampleY - sampleY).^2) / (2 * m);
    costHistory = [costHistory; deviation];

    % derivative of J w.r.t. t0
    theta0Gradient = sum(newSampleY - sampleY) / m;
    % derivative of J w.r.t. t1
    theta1Gradient = sum((newSampleY - sampleY) .* sampleX) / m;

    % gradient descent step; both gradients were computed before either update
    theta0 = theta0 - alpha * theta0Gradient;
    theta1 = theta1 - alpha * theta1Gradient;
end

% Y predicted using the final model
resultY = theta0 + theta1 * sampleX;
% colours: black for the input data, red for the predictions
resultC = repmat(cmap(1, :), m, 1);

scatter([sampleX sampleX], [sampleY resultY], [], [zeros(m, 3); resultC]);
axis([0 17 90 140]);   % axis range that contains all the data points
hold on;

fprintf('\ncomputed error values (first and last iteration)\n');
disp(costHistory([1 end]));
```

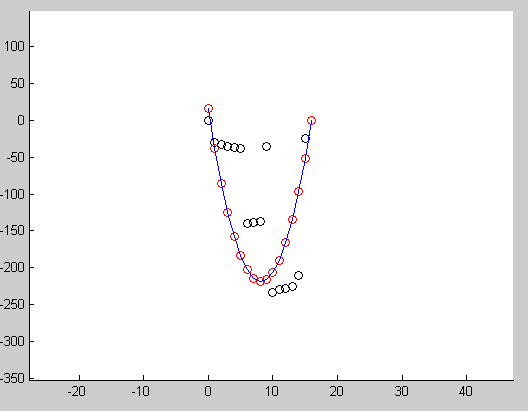

The image shows the result of running this code. Red circles indicate the points generated by the final model; black circles indicate the input data.
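One way to sanity-check the gradient descent result is to compare it with the exact least-squares line. MATLAB's backslash operator solves the least-squares problem directly for this design matrix. This cross-check is an addition, not part of the original code. The loop repeats the same descent (learning rate 0.01, 10000 iterations, start at t0 = 50, t1 = 1) in matrix form.

```matlab
% Data set from the code above
sampleX = [0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16];
sampleY = [100 110 120 122 125 135 112 122 128 129 130 124 117 116 128 132 125];
m = numel(sampleY);

% Design matrix: a column of ones for t0 and the areas for t1
A = [ones(m, 1), sampleX(:)];
thetaLS = A \ sampleY(:);   % exact least-squares solution [t0; t1]

% Same gradient descent as above, written with matrices
theta = [50; 1];
for i = 1:10000
    r = A * theta - sampleY(:);              % residuals H - Y
    theta = theta - 0.01 * (A' * r) / m;     % simultaneous update of t0 and t1
end

disp([theta thetaLS]);   % left column: gradient descent, right column: least squares
```

If the two printed columns agree, the gradient descent run has converged to the best-fitting line.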

Another example shows how the same approach can be used to fit a quadratic curve to the input points.
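Here is a minimal sketch of such a quadratic fit with gradient descent. Its assumptions are not taken from the original example. The data are made-up points lying exactly on y = 1 + 2x + 3x², with x kept in [-1, 1]. The learning rate of 0.5 and the 2000 iterations are illustrative choices. Keeping x small matters: with larger x values (such as the areas 0 to 16 above), the x² column would be far larger than the others and would need a much smaller learning rate or feature scaling.

```matlab
% Hypothetical data: points on the curve y = 1 + 2x + 3x^2 (no noise)
x = linspace(-1, 1, 21);
y = 1 + 2 * x + 3 * x.^2;
m = numel(y);

% Design matrix with one column per term of H = t0 + t1*x + t2*x^2
A = [ones(m, 1), x(:), x(:).^2];

theta = zeros(3, 1);   % start from t0 = t1 = t2 = 0
alpha = 0.5;           % assumed learning rate, suitable for x in [-1, 1]
for i = 1:2000
    r = A * theta - y(:);                   % residuals H - Y
    theta = theta - alpha * (A' * r) / m;   % gradient descent step on all three parameters
end

disp(theta');   % should approach [1 2 3]
```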